Personal Knowledge Management Systems should have Embeddings

Embeddings are what give AI intuition and fast thinking

As a result of training on large text corpora, Large Language Models (LLMs) learn a probability distribution over the most likely next token in a sequence. Tokens are typically words or pieces of words, not whole sentences or paragraphs. This is what makes these models fluent in conversation.

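The model's weights stay fixed during a conversation. What changes is the context: the next-token distribution is recomputed at every step from everything said so far.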
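To make the idea of a next-token distribution concrete, here is a minimal sketch. The candidate tokens and their scores are invented for illustration and do not come from any real model; the only real ingredient is the softmax function, which is the standard way to turn raw scores into probabilities.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - highest) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for candidate next tokens after the context
# "The cat sat on the". The numbers are made up for this example.
toy_scores = {" mat": 3.2, " sofa": 2.1, " roof": 1.0, " idea": -1.5}

next_token_probs = softmax(toy_scores)
most_likely = max(next_token_probs, key=next_token_probs.get)

for token, prob in sorted(next_token_probs.items(), key=lambda kv: -kv[1]):
    print(f"{token!r}: {prob:.3f}")
```

A different context would produce different scores and therefore a different distribution, even though nothing inside the model has changed.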

Another result of training is the embeddings. Words, sentences, and paragraphs are mapped to vectors in a very high-dimensional space, which can be equipped with a similarity or distance measure, e.g. cosine similarity.

These embeddings represent pieces of text as points, and the distance between two points becomes a measure of how closely related the words, sentences, etc. are.
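As a small illustration of proximity in a vector space, the sketch below computes cosine similarity between three toy vectors. The 3-dimensional vectors are invented stand-ins; real embeddings have hundreds or thousands of dimensions and come from a trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def cosine_distance(a, b):
    """A distance-like score derived from cosine similarity."""
    return 1.0 - cosine_similarity(a, b)

# Invented toy vectors, not the output of any real embedding model.
king = [0.9, 0.8, 0.1]
queen = [0.85, 0.9, 0.15]
banana = [0.1, 0.05, 0.95]

print(cosine_similarity(king, queen))   # close to 1: similar direction
print(cosine_similarity(king, banana))  # much lower: unrelated direction
```

Note that cosine similarity grows as texts get closer, so it is a similarity rather than a distance; `1 - similarity` is a common way to flip it.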

An excellent tutorial on embeddings can be found in an article by Cohere.ai: "What Are Word and Sentence Embeddings?"

"Word and sentence embeddings are the bread and butter of language models." - Cohere.ai

Embeddings are very useful for neighborhood searching, clustering, classification, recommendations, and even anomaly detection.

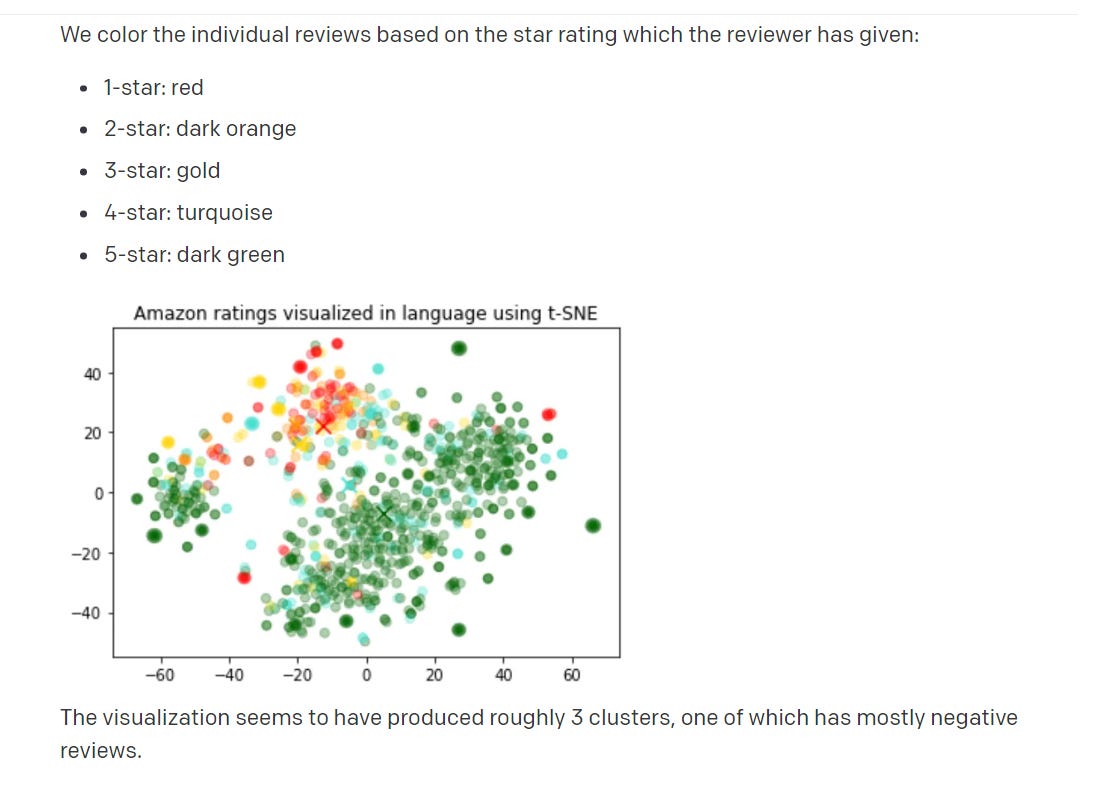

In the OpenAI documentation, you can find an example of clustering fine-food reviews. The dataset is a subset of the Amazon Fine Food Reviews dataset.

The resulting review embeddings are high-dimensional, so to visualize them, the t-SNE dimensionality-reduction algorithm is applied to obtain a 2-dimensional representation.

Embeddings provide a dense representation of tokens and their meanings in a geometric space. However, this representation can be lossy, resulting in faulty conclusions. The accuracy of embeddings depends heavily on the quality of data used in the training set.

The significant advantage of a geometric representation of texts and concepts is that it facilitates fast connections similar to those humans make in Kahneman's System 1, or Fast Thinking, which includes intuition. These connections are established geometrically, rather than through a deliberate reasoning process.

This presents an exciting prospect of utilizing embeddings in Personal Knowledge Management (PKM) systems. Concepts, ideas, and notes can be vectorized and embedded, and proximity is automatically determined.
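A minimal sketch of what "proximity is automatically determined" could look like in a PKM tool. The note titles and their 3-dimensional vectors are hypothetical; in practice the vectors would come from an embedding model, and `nearest_notes` is just an illustrative helper name, not part of any existing tool.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    length = math.sqrt(sum(x * x for x in vec))
    return [x / length for x in vec]

# Hypothetical notes with invented vectors standing in for real embeddings.
notes = {
    "Zettelkasten basics": [0.9, 0.2, 0.1],
    "Linking notes with Folgezettel": [0.8, 0.3, 0.2],
    "Sourdough starter log": [0.1, 0.9, 0.3],
    "Kahneman on fast thinking": [0.3, 0.1, 0.9],
}

# Normalize once at indexing time; queries then only need dot products.
index = {title: normalize(vec) for title, vec in notes.items()}

def nearest_notes(query_title, k=2):
    """Return the k notes whose vectors point in the most similar direction."""
    query = index[query_title]
    scored = [
        (sum(q * x for q, x in zip(query, vec)), title)
        for title, vec in index.items()
        if title != query_title
    ]
    scored.sort(reverse=True)
    return [title for _, title in scored[:k]]

related = nearest_notes("Zettelkasten basics", k=2)
print(related)
```

No folders or tags are involved here: the "related notes" list falls out of the geometry alone, which is the appeal for a PKM system.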

In such a PKM system, streams of ideas or Folgezettel can be tracked as paths through the embedding space, so that a thread can be picked up again where it left off.

Viewed from this perspective, Wardley Maps are simply a specialized kind of manual embedding.

Issues with premature categorization can be resolved by programs that search for hyperplanes containing major clusters, or by using Principal Component Analysis (PCA) or other dimensionality-reduction algorithms.
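To illustrate the PCA idea, here is a rough sketch that finds only the first principal component of a few toy points using power iteration. This is a simplification chosen to stay dependency-free; a real system would more likely use a library implementation, and the data points are invented.

```python
import math

def first_principal_component(points, iterations=100):
    """Estimate the direction of greatest variance via power iteration."""
    n, dim = len(points), len(points[0])

    # Center the data so the component describes variance, not position.
    mean = [sum(p[i] for p in points) / n for i in range(dim)]
    centered = [[p[i] - mean[i] for i in range(dim)] for p in points]

    # Sample covariance matrix (dim x dim).
    cov = [
        [sum(row[i] * row[j] for row in centered) / (n - 1) for j in range(dim)]
        for i in range(dim)
    ]

    # Repeatedly multiply by the covariance matrix and renormalize.
    # Assumes the starting vector is not orthogonal to the top component.
    v = [1.0] * dim
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        length = math.sqrt(sum(x * x for x in w))
        v = [x / length for x in w]

    # 1-dimensional coordinates of each point along that direction.
    projections = [sum(row[i] * v[i] for i in range(dim)) for row in centered]
    return v, projections

# Invented 3-d points that mostly vary along the (1, 1, 0) diagonal.
toy_points = [
    [1.0, 1.1, 0.0],
    [2.0, 1.9, 0.1],
    [3.0, 3.2, 0.0],
    [4.0, 3.9, 0.1],
    [5.0, 5.1, 0.0],
]

component, projections = first_principal_component(toy_points)
print(component)
print(projections)
```

The recovered direction acts as a category axis that emerges from the data itself, instead of one imposed up front.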

Embeddings can also aid in idea generation and creativity, which will be explored further in a future post.