Dimensional Reduction

The Manifold and Sub-Manifold Hypotheses

Life, whether natural or artificial, evolves through two opposing yet complementary processes: increasing complexity and increasing simplicity. The former involves adding more elements, variations, and interconnections within a system—imagine the intricate branching of a tree or the complex interactions within an ecosystem. This process, known as complexification, often leads to specialization, the emergence of hierarchies, the creation of new and diverse forms, and the breaking of symmetries.

Conversely, the latter process seeks to uncover underlying patterns, dimensional reduction, and create simplified abstractions of reality. This might involve discovering a unifying principle that explains seemingly disparate phenomena or developing a concise mathematical model to represent a complex system.

First, let us try to understand dimensional reduction, what it is and what it is not. Humans naturally perform dimensionality reduction when perceiving and reasoning about the world. For instance, we don't process every pixel of an image; instead, we abstract key features like shapes, colors, and patterns.

What is dimensional reduction.

Dimensional reduction is a foundational strategy for navigating complexity. It addresses the “curse of dimensionality” problem, where the number of possible solutions grows exponentially as the number of variables or dimensions increase linearly.

However, dimensional reduction serves not only to counter combinatorial explosion; it is also employed to gain insights from data, as illustrated in the examples below.

Dimensional reduction involves transforming data from a high-dimensional space into a low-dimensional space, ensuring that the low-dimensional representation retains some meaningful properties of the original data. The process is inherently lossy, while “meaningful properties” is always context-dependent.

For example:

In genomics, preserving gene expression patterns linked to disease is "meaningful."

In image recognition, retaining edges or textures critical for object classification matters.

In social networks, preserving community structures or influence hierarchies defines relevance.

Some well known dimensionality reduction techniques include:

1. PCA (Principal Component Analysis): PCA maps the original data into a new basis, where the axes are chosen based on maximal variances in the original dataset. The first few components will have most of the variances of the whole data. PCA is widely used for simplifying data, reducing noise, and visualizing high-dimensional data. However PCA is a linear technique.

2. t-SNE (t-Distributed Stochastic Neighbor Embedding): t-SNE is a non-linear dimensionality reduction technique primarily used for data visualization. It maps high-dimensional data to a lower-dimensional space (usually 2D or 3D) while preserving distances in the data. t-SNE is particularly effective for visualizing clusters and patterns in complex datasets.

3. UMAP (Uniform Manifold Approximation and Projection): UMAP is another non-linear dimensionality reduction technique that focuses on preserving both the local and global structure of the data. It is often used for visualization and clustering tasks. UMAP is known for its speed and ability to handle large datasets while maintaining meaningful representations.

However, the idea of dimensional reduction is much more general than represented by these three algorithms. Dimensional reduction is not merely a computational trick but a fundamental mechanism for making sense of complexity across disciplines. Humans routinely use it to summarize stories into themes and to distill events into historical narratives. Scientific modelling is also a kind of dimensional reduction. Abstraction in art reduces visual complexity while maintaining the symbolic or emotional resonance.

Some suggested that dimensional reduction is about discovering often hidden patterns in the data. By projecting data into lower dimensions, we often uncover latent structures:

Unsupervised Learning: Methods like autoencoders or manifold learning reveal intrinsic geometries (e.g., clusters, hierarchies) that define relationships between data points.

Causal Inference: Simplifying variables to their most influential drivers (e.g., via sparse regression) can expose causal mechanisms.

Human-Interpretable Insights: Reducing dimensions to 2D/3D visualizations allows humans to "see" patterns (e.g., customer segmentation in marketing).

In these examples, pattern discovery depends on aligning the reduction process with the type of pattern sought. For instance, PCA prioritizes global variance, while UMAP emphasizes local topology. The choice of method encodes assumptions about what constitutes a meaningful pattern.

But there is an important nuance here: dimensional reduction does not always produce "patterns" in the strict sense of recurring or repeating structures. While dimensional reduction often reveals structure (e.g., relationships, hierarchies, or gradients), not all structures qualify as "patterns" if we define patterns as repetitions of a recognizable form. For example, cultural trends, unique outliers are meaningful but lack the repetition inherent to patterns. Dimensional reduction also may reduce noise but does not reveal any structures.

Dimensional reduction is a tool for structure discovery, which may or may not include patterns. Its value lies in preserving contextually relevant relationships, whether repetitive or not.

The manifold and sub-manifold hypotheses

"Understanding the geometry of data is essential for perception. Objects and scenes in the real world often lie on low-dimensional manifolds embedded in high-dimensional spaces." Yi Ma

Real-world data tends to have an intrinsic dimensionality much smaller than the ambient space in which it is represented. This means that while the data may appear high-dimensional (e.g., an image with millions of pixels), the underlying structure is governed by fewer degrees of freedom.

The two experimental hypotheses have been suggested by many people working in data science or machine learning:

The manifold hypothesis suggests that high-dimensional data lies on or near a lower-dimensional manifold, capturing the intrinsic structure of the data. By identifying the underlying manifold, we can reduce the complexity of the data and focus on its essential features.

The sub-manifold hypothesis extends this idea by proposing that data can be segmented into distinct sub-manifolds, each representing a subset of the data. Sub-manifolds allow intelligent systems to categorize and reason about data more effectively.

For example in PCA, the manifold hypothesis is what justifies prioritizing on the first few components.

Data often exhibits clustering or segmentation into distinct groups, and each group may have its own intrinsic structure (i.e., its own sub-manifold).

Example: The MNIST dataset (784D pixel space) lies on a sub-manifold with intrinsic dimensionality ~3–10.

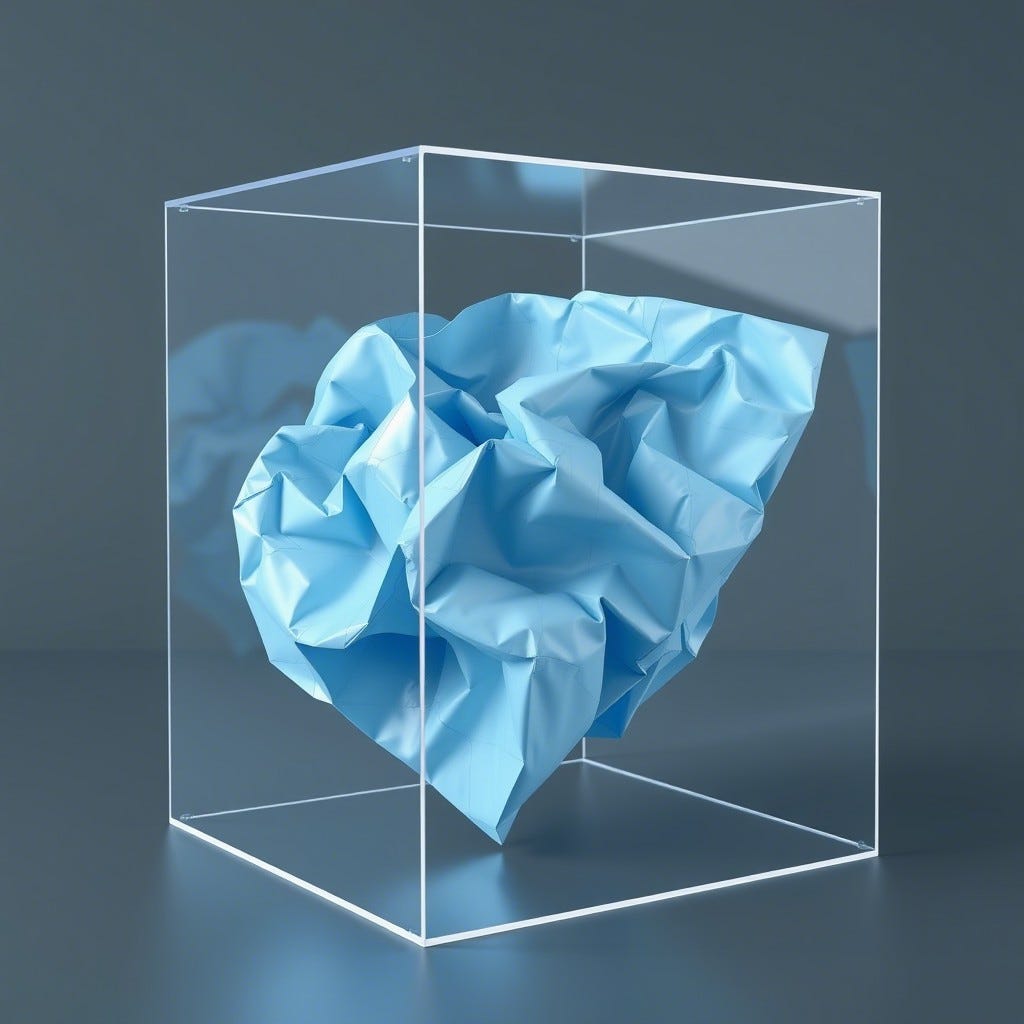

To easily visualize a manifold embedded in higher dimension, picture a sheet of paper crumpled up inside a box. Even though the paper exists in three-dimensional space (the box), its true nature is two-dimensional—it’s still just a flat surface bent and folded in various ways. Similarly, much of the data we encounter in real life has fewer degrees of freedom than it appears to have at first glance. A manifold is topologically equivalent to the Euclidean space for every local section of it, although it may not look like a manifold, it can be smoothen, as long as there are no cuts or tears.

Manifold and sub-manifold hypotheses appear to hold for real-world data. However, these hypotheses may not apply to artificial data unless that data is grounded in simulated physical experiences. The underlying reasons for the validity of these hypotheses remain unknown. Perhaps the complexity we observe stems from our inability to identify hidden patterns within the data. The unexpected success of Large Language Models, for instance, suggests that language may possess greater regularity than previously assumed.

The dimensionality of the input space is called the ambient dimension. Manifolds within this space have a lower dimensionality, referred to as the latent dimension. However, the latent dimension is not necessarily the intrinsic dimension, which represents the theoretical minimum dimensionality as defined by Kolmogorov complexity. This distinction is important: while the intrinsic dimension is typically computationally intractable, dimensional reduction algorithms can efficiently determine the latent dimension. Furthermore, dimensional reduction aims to preserve meaningful properties of the original data in the lower-dimensional representation.

In short, dimensional reduction seeks to minimize the latent dimension while maintaining computational efficiency and contextual relevance. Identifying these meaningful subspaces reveals previously hidden patterns that contribute to our understanding of the data.

The next post will explore the role of dimensional reduction in learning and intelligence, drawing on Yi Ma's concepts of Parsimony and Self-Consistency, as well as closed-loop transcription.